As artificial intelligence continues to gain traction, new tools and services are becoming available across industries. The Internet of Medical Things (IoMT) opens up many possibilities for applying AI, particularly to problems of privacy and data protection, and AI-enabled tools can help physicians diagnose patients more quickly and accurately. Companies ranging from startups to well-established firms are adopting AI-centric strategies.

A key trend in business AI in 2022 is the emergence of no-code AI platforms. These platforms let smaller businesses add sophisticated functionality to their products quickly, and they are claimed to cut the time needed to develop and maintain AI infrastructure by more than 90%.

AI is also making significant strides in customer service through the widespread adoption of chatbots. Chatbots give customers immediate assistance, which improves satisfaction and reduces the need for human intervention. As businesses increasingly aim to provide 24/7 support, chatbots are becoming an indispensable tool for handling customer inquiries in real time, and integrating them into existing customer service systems is expected to become standard practice across industries in the coming years.

AlphaFold is a new AI tool

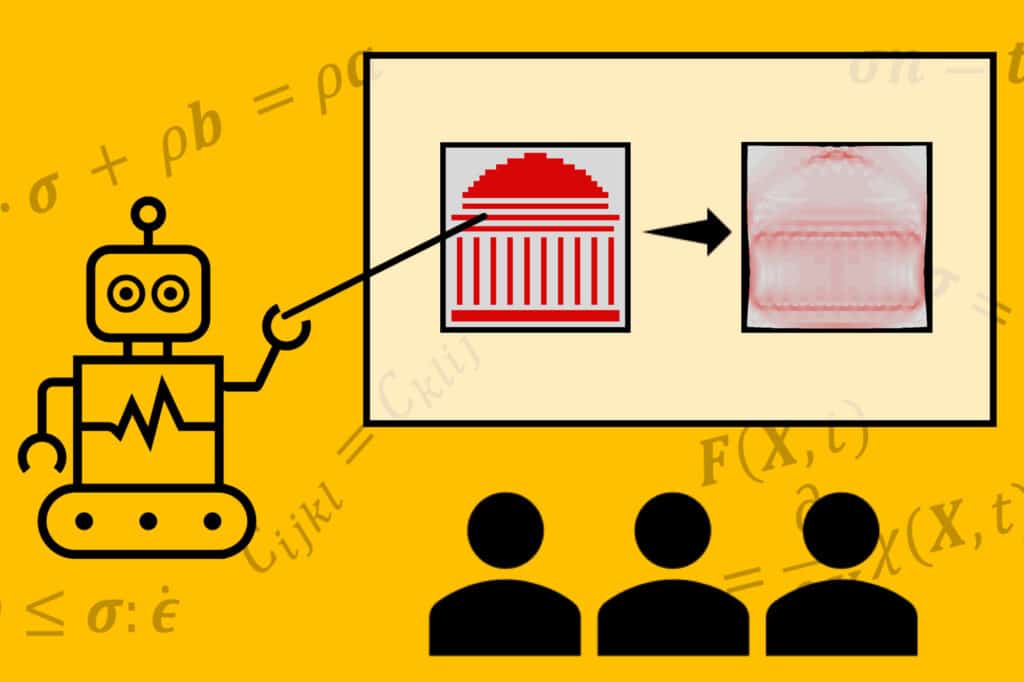

AlphaFold is an AI tool that predicts the three-dimensional structure of proteins. It was developed by DeepMind and is built on a deep neural network, a type of model loosely inspired by the human brain. The network is trained on a large amount of data and passes its input through hidden layers to produce the desired output. It takes a protein's amino-acid sequence as input and generates a predicted three-dimensional structure from that sequence.

Using data provided by scientists, AlphaFold can predict the structures of individual proteins. However, it is not completely accurate and can trip up on edge cases. The tool is limited to individual proteins and has no comprehensive knowledge of protein-DNA or protein-small-molecule interactions. Even so, researchers hope that AlphaFold will lead to breakthroughs in biomedicine.

The most difficult part of predicting protein structures accurately is working out how individual proteins interact with other cellular players. In a CASP competition, AlphaFold was able to predict the independent folding units of a protein, but many challenges remain. For instance, the human proteome is a complex mixture of many proteins, a large number of which have multiple domains.

AlphaFold, including its multimer model, is published by DeepMind as open-source software. It is written in Python and can be run from a Colab notebook. Users can view structure predictions, including the predicted aligned error (PAE), as an image, and the raw data can also be downloaded as a JSON file. However, because this JSON uses a custom format that existing software does not support, users need to write Python code to plot and analyse the data.
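As a rough illustration of analysing a downloaded PAE file in Python, the sketch below parses the JSON into a matrix and averages the error within and between two regions of a protein. The layout it expects (a one-element list holding a `predicted_aligned_error` key) is an assumption and may not match the file you download; the function names and the four-residue example data are hypothetical.

```python
import json


def load_pae_matrix(json_text):
    """Parse PAE JSON text into a square list-of-lists matrix.

    Assumed layout (check it against your own file): either a dict or a
    one-element list holding a dict, with a "predicted_aligned_error" key.
    """
    data = json.loads(json_text)
    record = data[0] if isinstance(data, list) else data
    matrix = record["predicted_aligned_error"]
    size = len(matrix)
    if any(len(row) != size for row in matrix):
        raise ValueError("PAE matrix is not square")
    return matrix


def mean_pae(matrix, rows, cols):
    """Average PAE over the block selected by two 0-based residue ranges."""
    values = [matrix[i][j] for i in rows for j in cols]
    return sum(values) / len(values)


# Hypothetical 4-residue protein made of two 2-residue domains.
EXAMPLE_JSON = json.dumps([{
    "predicted_aligned_error": [
        [0, 1, 20, 22],
        [1, 0, 21, 19],
        [20, 21, 0, 2],
        [22, 19, 2, 0],
    ]
}])

pae = load_pae_matrix(EXAMPLE_JSON)
within_domain = mean_pae(pae, range(0, 2), range(0, 2))
between_domains = mean_pae(pae, range(0, 2), range(2, 4))
print(within_domain, between_domains)
```

Low values inside a block and high values between blocks suggest that each domain is predicted confidently while their relative placement is not. The same matrix could be handed to a plotting library to reproduce the PAE image.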

DeepMind’s Gato is a general-purpose AI system

Gato is a general-purpose AI system developed by researchers at DeepMind. It was trained on a variety of datasets, including images and natural-language text, and a single model can perform many different tasks. It can even manipulate physical objects and converse with humans, albeit in limited ways, and it has been demonstrated on several real-world tasks.

This model is a good first step, but it still falls short of true general-purpose AI, which should be able to perform a wide variety of tasks without heavy hard-coding. One of Gato’s biggest weaknesses is its limited ‘context window’, the amount of information the system can hold in view at one time. The same limit is one reason Transformer-based language models struggle to write a book or a long essay: details that fall outside the window are forgotten. This ‘forgetting’ problem is a known Achilles heel of the technology.
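The effect of a fixed context window can be sketched in a few lines of Python. This is a simplified illustration, not how Gato or any particular model is implemented: the function name is hypothetical, and the text is split on whitespace purely for demonstration, whereas real models use their own tokenizers.

```python
def fit_to_context(tokens, window_size):
    """Keep only the most recent `window_size` tokens; older ones are dropped."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    return tokens[-window_size:]


# Hypothetical input text, split on whitespace for illustration only.
history = (
    "the hero is named Ada and she lives in Paris . "
    "later she travels north"
).split()

visible = fit_to_context(history, window_size=6)
print(visible)           # the six most recent tokens
print("Ada" in visible)  # the name introduced early on is no longer visible
```

With a window of six tokens, the model would still see that "she travels north" but no longer who "she" is, which is the kind of lost detail described above.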

Gato was tested on a variety of control tasks, and its average scores were compared with those of specialist agents. Working across many tasks gave Gato an opportunity to identify commonalities between them. Overall, its performance in the tests was adequate.
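One simple way to make such a comparison is to express the generalist's score on each task as a percentage of the specialist's score and then average across tasks. The sketch below shows that arithmetic only. The task names and scores are invented for illustration and are not Gato's results, and the function name is hypothetical.

```python
from statistics import mean


def percent_of_specialist(generalist_scores, specialist_scores):
    """Return each task's generalist score as a percentage of the specialist score."""
    result = {}
    for task, specialist in specialist_scores.items():
        if specialist == 0:
            raise ValueError(f"specialist score for {task!r} is zero")
        result[task] = 100.0 * generalist_scores[task] / specialist
    return result


# Hypothetical scores, invented for illustration only.
specialist = {"task_a": 200.0, "task_b": 50.0, "task_c": 80.0}
generalist = {"task_a": 150.0, "task_b": 50.0, "task_c": 40.0}

relative = percent_of_specialist(generalist, specialist)
average = mean(list(relative.values()))
print(relative)
print(average)
```

Normalizing per task before averaging keeps a task with large raw scores from dominating the overall figure.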

Uber’s self-driving car crash

An investigation of the deadly crash involving one of Uber’s self-driving cars shows that the system failed to correctly identify the pedestrian, Elaine Herzberg, who was walking a bicycle across the road outside a crosswalk, and failed to prevent the collision. Although the system detected Herzberg several times, the car did not avoid hitting her.

The incident occurred in Tempe, Arizona, and is believed to be the first pedestrian fatality involving self-driving technology. It raised questions about liability and the level of safety precautions required in an autonomous vehicle. Uber suspended its self-driving car testing program after the crash, but has since resumed testing at reduced speeds and with more restrictions. As of March 2018, there were no specific laws governing the responsibility of companies involved in autonomous car accidents.

Uber has come under fire for failing to properly train its safety drivers and to implement its safety plan. The company also failed to enforce its policy against cell phone use and to monitor its drivers’ behavior. Uber should also have its vehicles vetted by independent third parties to ensure their safety.

Uber’s self-driving car struck a pedestrian on March 18, 2018, causing her death. Uber has not publicly released full details of the accident, but those details matter to the public. The crash occurred when the self-driving car failed to stop before it struck Herzberg.

MIT’s Task Force on the Work of the Future report

The MIT Task Force on the Work of the Future report outlines a set of recommendations to make MIT more sustainable and more responsive to the evolving needs of society. Its recommendations include adding social equity to the curriculum, expanding experiential learning, and using professional academic advisors alongside faculty members. It also calls for better preparation for graduate careers, more opportunities for postdoctoral researchers, and increased collaboration and international activity.

The Task Force on the Work of the Future is a multidisciplinary initiative involving more than twenty students and faculty members at MIT. Its mission is to identify constructive pathways forward as technology continues to transform the world of work. The Task Force’s work is grounded in scientific evidence and deep expertise in technology. It also makes reasoned assumptions about future scenarios and develops policy-relevant ideas and insights.

The report cites examples of AI in the workplace, including the development of robots that can help workers perform complex tasks, and it includes field studies that back up the Task Force’s conclusions. In this way, the report counters the common doomsday perspective that AI will eliminate jobs. In its view, AI will transform the way people perform their jobs rather than destroy those jobs.

The Work of the Future report outlines a future in which there is enough work for everyone. Although the US labor force participation rate is declining, human labor will still be needed during the transition to the new economy. Consequently, it is critical to ensure that people are trained for this new environment.

Uber’s autonomous vehicle

Uber isn’t the only company interested in developing autonomous vehicles, but it has a strong financial incentive to do so: without a driver to pay, it would reportedly keep 75 percent of each fare rather than the usual 25 percent, which would mean higher revenues. Its future plans for autonomous vehicles include letting customers request driverless cars on demand and positioning these vehicles throughout the world.

Currently, the company’s modified Volvo XC90s are driven on public roads by human drivers who help train the autonomous driving algorithm. They drive a set of roads to simulate the “perfect drive”, and the recorded data helps the software learn how to drive within the pre-mapped area.

Ultimately, deployment of the AVs depends on the success of the test program. Uber will likely keep its testing limited until it can show that the technology is reliable enough for general real-world use. In the meantime, the company will continue testing its autonomous vehicles in Pittsburgh.

Uber began developing autonomous vehicles around 2015, when it partnered with Carnegie Mellon University’s National Robotics Engineering Center. It poached 40 scientists and engineers from the university and formed the Uber Advanced Technologies Group (ATG) to develop self-driving technology. It has since hired hundreds of employees to work on the technology.

Uber’s self-driving AI

AI is a powerful tool for solving problems. It helps companies cluster customers and understand their needs, and machine learning and related methods can make journeys better. For example, Uber uses AI to understand its drivers and predict where they will end up. It also uses AI to detect fraudulent rides, match riders with drivers, and optimize routes. AI can even handle customer service incidents by routing them to the most appropriate agents. These systems have reportedly increased Uber’s efficiency and customer satisfaction by 10 percent.

Uber is testing its self-driving AI on real streets. Its modified Volvo XC90 is driven by humans in order to map the area and simulate a “perfect drive.” The data from these “perfect drives” helps the software learn how to drive within the mapped area.

The autonomous cars have not yet reached Level 4 autonomy, but Uber is working toward it. It has also revealed plans to buy up to 24,000 Volvo XC90 SUVs for use as robo-taxis. In pilot projects, its self-driving cars have carried over 50,000 passengers across more than two million miles. However, the companies have not yet decided whether to roll out these robo-taxis nationwide.

The fatal accident in Tempe, Arizona, has highlighted the risks of self-driving cars. A report in Commentary blamed the pedestrian, while a preliminary National Transportation Safety Board report blamed Uber’s self-driving AI for the crash.